For decades, marketing leaders measured digital visibility through rankings, traffic, and share of voice.

Those metrics worked in a landscape dominated by search engines.

But generative engines like ChatGPT, Perplexity, and Google’s AI Overviews have changed how people discover information, and how brands need to measure success.

Traditional dashboards no longer tell the full story. CMOs now need AI discoverability scorecards: a framework for benchmarking visibility across generative platforms.

Why Generative Engines Require New KPIs

Generative search doesn’t display the ten blue links of traditional search.

Instead, it generates summaries, cites a handful of sources, and often shapes the customer’s perception before they ever visit a website.

That creates new challenges for measurement:

- Visibility is based on citations, not rankings

- Context matters as much as the citation itself

- Competitor brands can gain ground without ever outranking you in Google

If your dashboards don’t account for generative engines, you’re missing where customers are already making decisions.

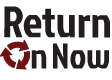

The Core Elements of an AI Discoverability Scorecard

A scorecard provides CMOs with a structured way to track progress.

Key performance indicators (KPIs) include:

Citation Share

Definition: The percentage of generative answers that cite or reference your brand, compared with competitors.

Example: If you appear in 25 out of 100 queries related to your category in Perplexity, your citation share is 25%. If your top competitor appears in 40, you have a gap to close.

How to Measure: Record citations for a list of core queries weekly or monthly. Benchmark against your top 2–3 competitors.
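To make the calculation concrete, here is a minimal Python sketch. It assumes you log, for each tracked query, the set of brands cited in the generative answer. The function name, data layout, and brand labels are hypothetical, and the counts simply mirror the 25-versus-40 example above.

```python
from collections import Counter


def citation_share(results: dict[str, set[str]], brands: list[str]) -> dict[str, float]:
    """Percentage of tracked queries whose generative answer cites each brand.

    Hypothetical input: `results` maps each tracked query to the set of
    brands cited in the answer for that query (recorded manually or by a tool).
    """
    total = len(results)
    if total == 0:
        return {brand: 0.0 for brand in brands}
    # Each query counts at most once per brand because `cited` is a set.
    counts = Counter(brand for cited in results.values() for brand in cited)
    return {brand: 100 * counts[brand] / total for brand in brands}


# Illustrative log: 100 Perplexity queries, your brand cited in 25,
# your top competitor in 40 (placeholder brand names).
log = {f"query {i}": set() for i in range(100)}
for i in range(25):
    log[f"query {i}"].add("YourBrand")
for i in range(40):
    log[f"query {i}"].add("Competitor")

shares = citation_share(log, ["YourBrand", "Competitor"])
print(shares)  # {'YourBrand': 25.0, 'Competitor': 40.0}
print(shares["Competitor"] - shares["YourBrand"])  # 15.0
```

Running the same function on each engine's log at every weekly or monthly check gives you the benchmark series against your top competitors.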

Engine Coverage

Definition: How broadly your brand is visible across generative engines.

Example: You might dominate Google AI Overviews but rarely show up in Perplexity or Bing Copilot. That uneven coverage is a risk.

How to Measure: Track mentions across engines by query set. Create a coverage percentage (number of engines where you appear ÷ total engines tracked).
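The coverage percentage is a simple ratio. The sketch below assumes you already have, per engine, a count of tracked queries in which your brand was cited. Treating a single citation as "appearing" is an assumption you can tighten with the hypothetical `min_citations` parameter, and the counts are placeholders.

```python
def engine_coverage(citations_by_engine: dict[str, int], min_citations: int = 1) -> float:
    """Engines where the brand appears / total engines tracked, as a percentage."""
    if not citations_by_engine:
        return 0.0
    present = sum(1 for n in citations_by_engine.values() if n >= min_citations)
    return 100 * present / len(citations_by_engine)


# Placeholder counts of tracked queries citing your brand in each engine.
tracked = {
    "Google AI Overviews": 31,
    "ChatGPT": 12,
    "Perplexity": 0,
    "Bing Copilot": 0,
}
print(engine_coverage(tracked))  # 50.0
print(engine_coverage(tracked, min_citations=20))  # 25.0
```

Raising the threshold shows how much of your coverage rests on a handful of incidental mentions.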

Content Type Influence

Definition: The content formats most likely to trigger citations in AI answers.

Example: If AI platforms cite your FAQs and how-to guides 70% of the time while blog posts rarely surface, you know where to invest.

How to Measure: Categorize citations by content type. Look for trends over time to see which formats AI models trust most.
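Once each citation is tagged with a content type (a manual or tool-assisted step this sketch takes as given), the breakdown is a frequency count. The labels and counts below are hypothetical, chosen to reproduce the 70% FAQ-plus-how-to example.

```python
from collections import Counter


def content_type_mix(citation_types: list[str]) -> dict[str, float]:
    """Share of citations (in %) that point at each content type, most common first."""
    total = len(citation_types)
    counts = Counter(citation_types)
    # An empty list yields an empty dict, so there is no division by zero.
    return {ctype: round(100 * n / total, 1) for ctype, n in counts.most_common()}


# One label per citation observed in a reporting period (hypothetical).
period = ["faq"] * 4 + ["how-to"] * 3 + ["blog"] * 2 + ["product page"]
print(content_type_mix(period))
# {'faq': 40.0, 'how-to': 30.0, 'blog': 20.0, 'product page': 10.0}
```

Comparing this dictionary across periods shows which formats are gaining or losing ground.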

Entity Strength

Definition: How consistently AI engines recognize your brand and related topics as entities.

Example: If “Return On Now” is always labeled as a marketing consultancy in citations, entity strength is high. However, if AI models confuse our brand with unrelated companies, strength is weak.

How to Measure: Review citations for consistency. Monitor knowledge graph entries, structured data, and schema alignment.

Attribution Quality

Definition: The depth of brand presence in generative citations.

Example: A citation that includes your brand name, logo, and clickable link is stronger than a passing text mention with no link.

How to Measure: Classify each citation as strong (linked), medium (named), or weak (implied). Track the percentage breakdown.
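The strong/medium/weak classification can be expressed as a small rule. This sketch assumes each citation is recorded with two yes/no fields (whether the answer names the brand and whether it links to your site) and that a link outranks a name. The `Citation` record and the tier rule are hypothetical, not a standard.

```python
from collections import Counter
from dataclasses import dataclass

TIERS = ("strong", "medium", "weak")


@dataclass
class Citation:
    names_brand: bool  # brand name appears in the answer text
    has_link: bool     # answer includes a clickable link to your site


def attribution_tier(c: Citation) -> str:
    """Assumed rule: linked = strong, named only = medium, otherwise weak (implied)."""
    if c.has_link:
        return "strong"
    if c.names_brand:
        return "medium"
    return "weak"


def attribution_breakdown(citations: list[Citation]) -> dict[str, float]:
    """Percentage of citations in each tier."""
    if not citations:
        return {tier: 0.0 for tier in TIERS}
    counts = Counter(attribution_tier(c) for c in citations)
    return {tier: 100 * counts[tier] / len(citations) for tier in TIERS}


sample = [
    Citation(names_brand=True, has_link=True),
    Citation(names_brand=False, has_link=True),
    Citation(names_brand=True, has_link=False),
    Citation(names_brand=False, has_link=False),
]
print(attribution_breakdown(sample))  # {'strong': 50.0, 'medium': 25.0, 'weak': 25.0}
```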

Turning Data Into Action

If all you focus on is tracking KPIs, you’re overlooking half of the equation.

A scorecard gives you a baseline and reveals where to act:

- Content gaps: Where LLMs cite competitors but not you

- Format opportunities: Content types that engines prefer in your niche

- Schema alignment: Areas where structured data can strengthen recognition

- Brand consistency: Whether your name, messaging, and entities are consistent across channels
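Content gaps fall straight out of a per-query citation log. A minimal sketch, assuming the log maps each tracked query to the set of brands cited in the answer; the queries and brand names are hypothetical.

```python
def content_gaps(
    results: dict[str, set[str]], brand: str, competitors: set[str]
) -> dict[str, list[str]]:
    """Queries where at least one competitor is cited but `brand` is not."""
    gaps: dict[str, list[str]] = {}
    for query, cited in sorted(results.items()):
        rivals = cited & competitors
        if brand not in cited and rivals:
            gaps[query] = sorted(rivals)
    return gaps


log = {
    "best crm for startups": {"CompetitorA"},
    "crm pricing comparison": {"YourBrand", "CompetitorA"},
    "how to migrate crm data": {"CompetitorA", "CompetitorB"},
    "crm onboarding checklist": set(),
}
print(content_gaps(log, "YourBrand", {"CompetitorA", "CompetitorB"}))
# {'best crm for startups': ['CompetitorA'], 'how to migrate crm data': ['CompetitorA', 'CompetitorB']}
```

Queries where no brand is cited are excluded here; you may want to track those separately as open opportunities.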

The goal isn’t just to measure visibility; it’s to direct resources toward strengthening your brand’s footprint in generative discovery.

Common Pitfalls CMOs Face With These New KPIs

Adopting AI discoverability scorecards isn’t without challenges.

Here are mistakes to avoid:

- Focusing only on Google: If you overlook Perplexity, Bing Copilot, or ChatGPT, you’ll have blind spots in your visibility.

- Measuring without benchmarking: Raw citation numbers mean little without competitor comparisons.

- Treating citations as static: Citations change weekly as models update. A snapshot isn’t enough; you need trends.

- Ignoring context: Not all citations are equal. Negative or inaccurate citations can actively damage your brand’s reputation.

- Overcomplicating dashboards: Too many metrics confuse the C-suite. A scorecard should highlight only what drives decisions.
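Because citations shift as models update, it helps to report movement rather than a single number. A minimal sketch, assuming you store one citation-share value per week (the figures are placeholders): week-over-week deltas show direction, and a rolling mean dampens week-to-week noise. The three-week window is an arbitrary choice.

```python
def week_over_week(shares: list[float]) -> list[float]:
    """Point change in citation share between consecutive weekly snapshots."""
    return [round(curr - prev, 1) for prev, curr in zip(shares, shares[1:])]


def rolling_mean(shares: list[float], window: int = 3) -> list[float]:
    """Mean of each consecutive `window`-week span (empty if too few weeks)."""
    if window <= 0:
        raise ValueError("window must be positive")
    return [
        round(sum(shares[i : i + window]) / window, 1)
        for i in range(len(shares) - window + 1)
    ]


weekly_share = [22.0, 25.0, 24.0, 28.0]  # placeholder % values, oldest first
print(week_over_week(weekly_share))  # [3.0, -1.0, 4.0]
print(rolling_mean(weekly_share))  # [23.7, 25.7]
```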

Building the Dashboard for CMOs

A CMO’s dashboard must now include both traditional SEO and AI discoverability KPIs.

Rankings, traffic, and conversions still matter, but they’re incomplete.

Without citation share, engine coverage, and attribution quality, you can’t see the full impact of your content in an AI-driven landscape.

By adopting discoverability scorecards, CMOs can stay ahead of competitors and ensure their brands are visible where buying decisions increasingly begin: inside generative answers.

The Future of AI Discoverability KPIs

The scorecards you build today are just the starting point.

Over the next one to three years, generative engines will expand how they surface and attribute content, and new KPIs will enter the picture.

- Cross-platform discoverability: Customers will consult multiple engines before making a decision. How consistently your brand appears across ChatGPT, Perplexity, Google AI Overviews, Bing Copilot, and newer entrants will become as important as market share in traditional industries.

- Voice-driven interfaces: As generative AI integrates into voice assistants, visibility will extend to spoken results. Future scorecards will need to capture how often answers delivered through voice channels include your brand.

- Multimodal citations: AI systems are beginning to generate not just text but also images, video summaries, and interactive charts. Brands will need to monitor whether their visuals, logos, and multimedia content are being used and attributed correctly.

- Trust and sentiment signals: It won’t be enough to count citations. Future KPIs will need to measure how the brand is framed (positively, neutrally, or negatively) to give a true picture of discoverability.

The takeaway: CMOs should treat today’s AI discoverability scorecards as a living framework.

What matters most is avoiding lock-in to one static model. Build the scorecard so it can adapt as generative engines evolve.

Closing: From Search to Generative Visibility

Search is evolving, and measurement has to evolve with it.

AI discoverability scorecards give CMOs the framework to benchmark, compare, and act on the most important metric of tomorrow: visibility in generative engines.

Brands that build these scorecards now will be the ones shaping the competitive landscape in the AI era.

Need help sorting out all of these metrics and your targeting for AEO and GEO?

Check out our AI Discoverability Services to see how we can help, and contact us to get started when you’re ready to roll.

Tommy Landry
